How Does Kafka Log Compaction Work?

What is Log Compaction?

Log compaction is a Kafka retention policy that works per key rather than by time or size. Instead of deleting old messages once they pass a retention limit, it guarantees that at least the most recent value for each message key is kept within a partition, while older values for the same key are eventually removed. This is especially valuable when you need to maintain the current state of your data, such as database change records or configuration settings.

How Log Compaction Works

1. Log Storage Structure

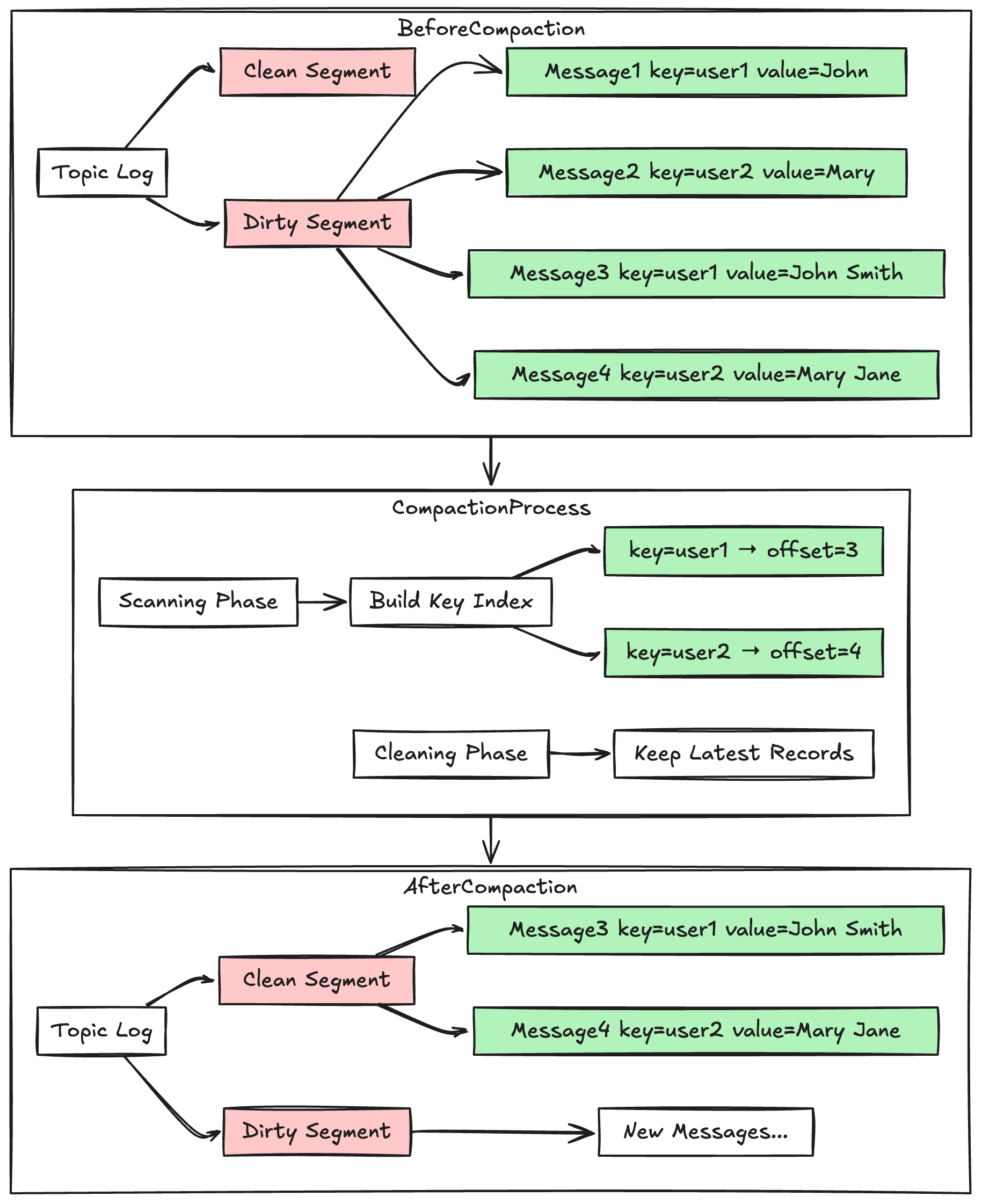

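Kafka divides each partition's log into two portions, each made up of one or more segment files:

- Clean portion (tail): records that have already been compacted

- Dirty portion (head): records written since the last compaction, waiting to be compacted

The active segment, which the broker is still writing to, is never compacted.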
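A minimal Python sketch of this split, assuming a partition log is simply a list of records and that a single hypothetical `first_dirty_offset` marks where the dirty portion begins. Kafka actually works on segment files and persists this position in a cleaner checkpoint; `Record` and `split_log` are illustrative names, not Kafka APIs.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Record:
    offset: int
    key: Optional[str]
    value: Optional[str]


def split_log(log: List[Record], first_dirty_offset: int) -> Tuple[List[Record], List[Record]]:
    """Split a partition log into its clean and dirty portions.

    Records below first_dirty_offset were already compacted (clean);
    records at or above it have not been compacted yet (dirty).
    """
    clean = [r for r in log if r.offset < first_dirty_offset]
    dirty = [r for r in log if r.offset >= first_dirty_offset]
    return clean, dirty


# Hypothetical partition: offset 1 was removed by an earlier compaction.
log = [
    Record(0, "a", "1"),
    Record(2, "b", "1"),
    Record(3, "a", "2"),
    Record(4, "b", "2"),
]
clean, dirty = split_log(log, first_dirty_offset=3)
print([r.offset for r in clean])  # [0, 2]
print([r.offset for r in dirty])  # [3, 4]
```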

2. Compaction Process

The compaction process consists of two main phases:

- Scanning Phase:

  - Reads every record in the dirty portion

  - Builds an in-memory offset map from each message key to the offset of its latest record

- Cleaning Phase:

  - Rewrites the log segments, keeping a record only if it is the latest record for its key

  - Removes older records that a newer record with the same key supersedes

  - Keeps the surviving records in their original order, with their original offsets
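The two phases can be sketched in Python as follows. This is a simplified model, not Kafka's implementation: a plain dict stands in for the broker's fixed-size offset map (which stores key hashes), the hypothetical `build_offset_map` and `clean_log` functions work on an in-memory list instead of segment files, tombstones and the active segment are ignored, and the sample records are made up.

```python
from typing import Dict, List, NamedTuple, Optional


class Record(NamedTuple):
    offset: int
    key: str
    value: Optional[str]


def build_offset_map(dirty: List[Record]) -> Dict[str, int]:
    """Scanning phase: map each key in the dirty portion to its latest offset."""
    offset_map: Dict[str, int] = {}
    for record in dirty:
        # Records are scanned in offset order, so later writes overwrite
        # earlier ones and the map keeps the newest offset per key.
        offset_map[record.key] = record.offset
    return offset_map


def clean_log(log: List[Record], offset_map: Dict[str, int]) -> List[Record]:
    """Cleaning phase: keep a record unless a newer record with the same key exists.

    Keys missing from the map (seen only in the clean portion) are kept.
    Surviving records keep their original offsets and order.
    """
    return [r for r in log if r.offset >= offset_map.get(r.key, -1)]


log = [
    Record(0, "user-1", "John"),
    Record(1, "user-2", "Ann"),
    Record(2, "user-1", "John Smith"),
    Record(3, "user-3", "Bob"),
]
first_dirty_offset = 1  # hypothetical: offset 0 was already compacted
dirty = [r for r in log if r.offset >= first_dirty_offset]
compacted = clean_log(log, build_offset_map(dirty))
print([(r.offset, r.key, r.value) for r in compacted])
# [(1, 'user-2', 'Ann'), (2, 'user-1', 'John Smith'), (3, 'user-3', 'Bob')]
```

Note that offset 0 is dropped even though it sat in the clean portion: the offset map built from the dirty portion shows a newer record for `user-1`.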

3. Compaction Triggers

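Compaction kicks in when:

- The dirty ratio (dirty bytes divided by total log bytes) exceeds the threshold set by log.cleaner.min.cleanable.ratio (min.cleanable.dirty.ratio at the topic level)

- A record has stayed uncompacted longer than max.compaction.lag.ms, which makes the log eligible regardless of its dirty ratio

- Cleaner threads find an eligible log on their next check; when no log qualifies they sleep for log.cleaner.backoff.ms before looking again

Kafka does not provide a command to force compaction on demand; lowering min.cleanable.dirty.ratio or max.compaction.lag.ms for a topic is a common way to get it cleaned sooner.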
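A rough Python model of the ratio and lag triggers is sketched below. `is_cleanable` is a hypothetical helper: the real cleaner computes the ratio only over cleanable bytes (excluding the active segment and records younger than min.compaction.lag.ms) and picks the dirtiest eligible log first.

```python
def is_cleanable(
    clean_bytes: int,
    dirty_bytes: int,
    min_cleanable_ratio: float = 0.5,
    oldest_dirty_age_ms: int = 0,
    max_compaction_lag_ms: float = float("inf"),
) -> bool:
    """Approximate whether the cleaner would consider this log eligible.

    dirty ratio = dirty bytes / (clean bytes + dirty bytes)
    """
    if dirty_bytes == 0:
        return False  # nothing to clean
    dirty_ratio = dirty_bytes / (clean_bytes + dirty_bytes)
    # Eligible when the dirty ratio exceeds the threshold, or when a dirty
    # record has waited longer than max.compaction.lag.ms.
    return dirty_ratio > min_cleanable_ratio or oldest_dirty_age_ms > max_compaction_lag_ms


print(is_cleanable(400, 600))  # True: ratio 0.6
print(is_cleanable(900, 100))  # False: ratio 0.1
print(is_cleanable(900, 100, oldest_dirty_age_ms=10_000, max_compaction_lag_ms=5_000))  # True
```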

How to Configure Log Compaction?

Here's how to set up log compaction with broker-wide defaults in server.properties (individual topics can override them):

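```properties
# Make compaction the default cleanup policy for all topics
# (for a single topic, set cleanup.policy=compact instead)
log.cleanup.policy=compact

# How long a cleaner thread sleeps when no log needs cleaning (default: 15000 ms)
log.cleaner.backoff.ms=30000

# Minimum dirty ratio before a log is eligible for compaction (default: 0.5)
log.cleaner.min.cleanable.ratio=0.5

# Number of background cleaner threads (default: 1)
log.cleaner.threads=1
```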
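These properties are broker-wide defaults, and a topic overrides them with differently named topic-level settings. The small Python sketch below (the `topic_overrides` helper and its mapping table are illustrative) translates the two compaction settings that have topic-level equivalents; the thread and backoff settings apply to the broker's cleaner as a whole, so they have no per-topic form.

```python
from typing import Dict

# Broker-level property -> topic-level property that overrides it.
BROKER_TO_TOPIC = {
    "log.cleanup.policy": "cleanup.policy",
    "log.cleaner.min.cleanable.ratio": "min.cleanable.dirty.ratio",
}


def topic_overrides(broker_props: Dict[str, str]) -> Dict[str, str]:
    """Translate broker-level compaction defaults into topic-level overrides.

    Broker-only settings (cleaner threads, backoff) are dropped.
    """
    return {BROKER_TO_TOPIC[k]: v for k, v in broker_props.items() if k in BROKER_TO_TOPIC}


broker_props = {
    "log.cleanup.policy": "compact",
    "log.cleaner.backoff.ms": "30000",
    "log.cleaner.min.cleanable.ratio": "0.5",
    "log.cleaner.threads": "1",
}
print(topic_overrides(broker_props))
# {'cleanup.policy': 'compact', 'min.cleanable.dirty.ratio': '0.5'}
```

The resulting dictionary has the shape expected by the `config` argument of confluent-kafka's `NewTopic`, and each entry can also be passed to `kafka-topics.sh` as `--config key=value`.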

Use Cases

Log compaction is best suited for the following scenarios:

1. Database Change Records

Example of user information updates:

- Initial record: key=1001, value=John

- Update record: key=1001, value=John Smith

- After compaction: key=1001, value=John Smith
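Replaying this example through a minimal Python model of compaction shows the result. The `compact` function is a hypothetical stand-in that keeps the last value per key in log order; it skips the offset map and segment handling of the real cleaner.

```python
from typing import List, Tuple


def compact(records: List[Tuple[str, str]]) -> List[Tuple[str, str]]:
    """Keep only the last value written for each key, in original log order."""
    # Later indexes overwrite earlier ones, leaving the last position per key.
    last_index = {key: i for i, (key, _) in enumerate(records)}
    return [rec for i, rec in enumerate(records) if last_index[rec[0]] == i]


user_updates = [("1001", "John"), ("1001", "John Smith")]
print(compact(user_updates))  # [('1001', 'John Smith')]
```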

2. System Configuration Management

Example of connection settings:

- Initial config: key=max_connections, value=100

- Updated config: key=max_connections, value=200

- After compaction: key=max_connections, value=200
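Compaction does not change the configuration a consumer ends up with. The sketch below assumes a hypothetical `materialize` helper that replays records from the beginning of the topic into a dictionary, and rebuilds the same state from the log before and after compaction.

```python
from typing import Dict, Iterable, Tuple


def materialize(records: Iterable[Tuple[str, str]]) -> Dict[str, str]:
    """Replay (key, value) records from the start of a topic into current state."""
    state: Dict[str, str] = {}
    for key, value in records:
        state[key] = value  # a later record for the same key replaces the earlier one
    return state


before_compaction = [("max_connections", "100"), ("max_connections", "200")]
after_compaction = [("max_connections", "200")]
print(materialize(before_compaction) == materialize(after_compaction))  # True
```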

3. State Data Storage

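- Keep only the latest state of each entity

- Save storage space: a compacted topic grows roughly with the number of distinct keys, not the number of updates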
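A quick way to see the storage effect: after a full compaction at most one record per key survives, so the retained count equals the number of distinct keys. The `compaction_footprint` helper and the workload below are hypothetical; real topics also keep their not-yet-compacted dirty portion, so they are usually somewhat larger.

```python
from typing import Iterable, Tuple


def compaction_footprint(keys: Iterable[str]) -> Tuple[int, int]:
    """Return (records before compaction, records after a full compaction)."""
    key_list = list(keys)
    # A full compaction keeps at most one record per key.
    return len(key_list), len(set(key_list))


# Hypothetical workload: 3 entities, each updated 4 times.
updates = [f"entity-{i % 3}" for i in range(12)]
print(compaction_footprint(updates))  # (12, 3)
```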

Important Considerations

When using log compaction, keep these points in mind:

1. Messages Must Have Keys

- Compaction works per key, so every record needs a non-null key

- The broker rejects records without a key when they are written to a compacted topic
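Since keyless writes fail on the broker, a producer may prefer to catch them early. The sketch below is a hypothetical client-side guard (`check_record` and `KeylessRecordError` are illustrative names, not part of any Kafka client); it treats the topic as compacted whenever its cleanup.policy list contains `compact`, as in `compact,delete`.

```python
from typing import Optional


class KeylessRecordError(ValueError):
    """Raised for a record that a compacted topic would reject."""


def check_record(key: Optional[bytes], cleanup_policy: str) -> None:
    """Fail fast before sending a keyless record to a compacted topic.

    cleanup_policy is the topic's cleanup.policy value, which may be a
    comma-separated list such as "compact,delete".
    """
    policies = {p.strip() for p in cleanup_policy.split(",")}
    if "compact" in policies and key is None:
        raise KeylessRecordError("compacted topics require a non-null record key")


check_record(b"1001", "compact")  # passes: the record has a key
check_record(None, "delete")      # passes: the topic is not compacted
```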

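2. Impact on System Performance

- Cleaner threads consume CPU, disk I/O, and memory for the offset map (log.cleaner.dedupe.buffer.size)

- Tune log.cleaner.threads, log.cleaner.dedupe.buffer.size, and log.cleaner.io.max.bytes.per.second to balance cleaning speed against the broker's normal workload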
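To size the offset map, the sketch below roughly estimates how many distinct keys one cleaner thread can index in a single pass. It assumes implementation details worth verifying for your Kafka version: log.cleaner.dedupe.buffer.size (default 128 MB) is split evenly across cleaner threads, each entry takes 24 bytes (a 16-byte MD5 key hash plus an 8-byte offset), and the map is filled up to log.cleaner.io.buffer.load.factor (default 0.9). `max_keys_per_pass` is a hypothetical helper; if the dirty portion holds more keys than this, a pass cleans only part of it.

```python
def max_keys_per_pass(
    dedupe_buffer_bytes: int = 128 * 1024 * 1024,  # log.cleaner.dedupe.buffer.size default
    cleaner_threads: int = 1,                      # log.cleaner.threads
    load_factor: float = 0.9,                      # log.cleaner.io.buffer.load.factor default
    bytes_per_entry: int = 24,                     # assumed: 16-byte MD5 hash + 8-byte offset
) -> int:
    """Estimate how many distinct keys one cleaner thread can map per pass."""
    per_thread_bytes = dedupe_buffer_bytes // cleaner_threads
    slots = per_thread_bytes // bytes_per_entry
    return int(slots * load_factor)


print(max_keys_per_pass())                   # about 5 million keys
print(max_keys_per_pass(cleaner_threads=4))  # about a quarter of that
```

Adding threads speeds up cleaning across many partitions, but with a fixed buffer each thread can cover fewer keys per pass.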

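3. Message Order Guarantees

- Compaction never reorders records: surviving records keep their original offsets (leaving gaps where records were removed), so records with the same key stay in order

- Ordering is only guaranteed within a partition, so records with different keys in different partitions have no guaranteed relative order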
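Because removed records leave gaps, consumer code should not assume that the next offset is the previous one plus one. The hypothetical `offset_gaps` check below, applied to the offsets a consumer reads from one partition, confirms they still strictly increase and lists the gaps compaction left behind.

```python
from typing import List


def offset_gaps(offsets: List[int]) -> List[int]:
    """Return offsets missing between consecutive records of one partition.

    Raises ValueError if the offsets do not strictly increase, which
    compaction never causes.
    """
    for prev, nxt in zip(offsets, offsets[1:]):
        if nxt <= prev:
            raise ValueError(f"offset {nxt} does not follow {prev}")
    return [o for prev, nxt in zip(offsets, offsets[1:]) for o in range(prev + 1, nxt)]


# Hypothetical offsets read from a compacted partition.
print(offset_gaps([2, 3, 4, 7]))  # [5, 6]
```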

Summary

Kafka's log compaction is a practical way to manage retention when only the latest state of each key matters. It retains at least the most recent value for every key, discards superseded values to save storage space, and keeps that state available to any consumer that reads the topic from the beginning. With the cleaner settings tuned to your workload, it keeps state topics bounded by the number of keys rather than the number of updates.
