What is a Kafka Consumer Group?

What is a Consumer Group?

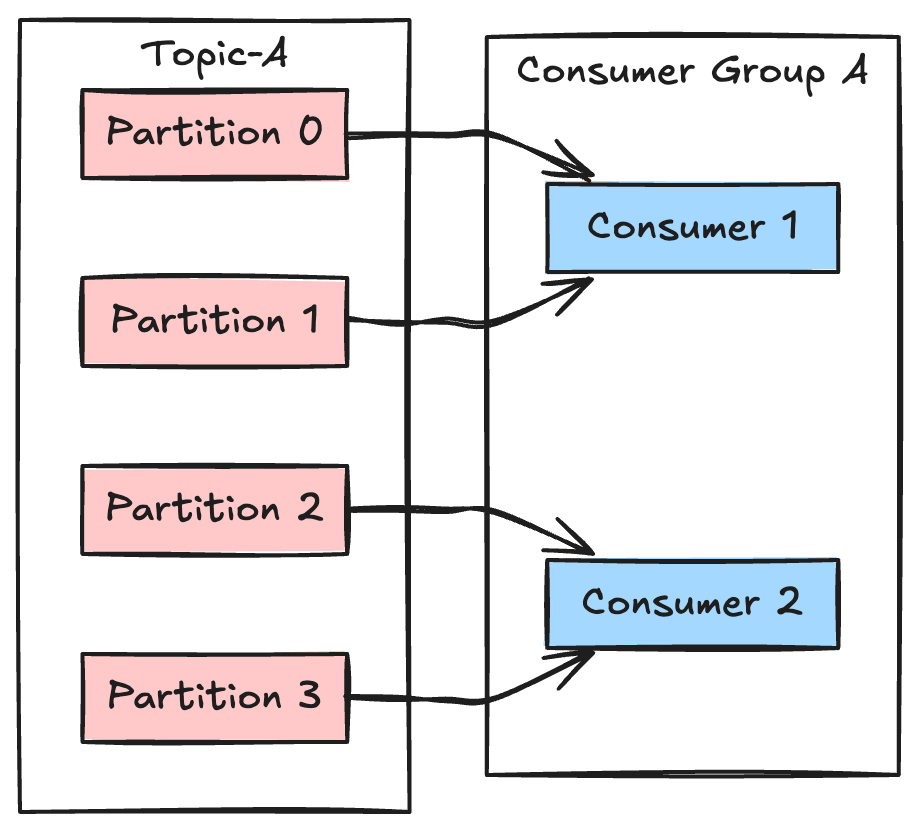

A Consumer Group is Kafka's mechanism for organizing consumers to collectively process messages from topics. It enables multiple Consumer instances to work together, providing horizontal scalability while ensuring partition-level ordering guarantees.

Basic Concept

Key Features of Consumer Groups

1. Consumption Division

- Each partition is consumed by only one consumer within a group

- A single consumer can handle multiple partitions

- Automatic load balancing between group members

2. Consumer Offset Management

Consumption Progress Tracking:

├── Auto-commit: enable.auto.commit=true

└── Manual commit: enable.auto.commit=false

3. Consumer Group Isolation

Different Consumer Groups operate independently:

Topic-A

├── Consumer Group 1: Order Processing

└── Consumer Group 2: Order Analytics

Real-World Use Cases

1. Message Broadcasting

One message needs processing by multiple systems:

├── Group 1: Order System

├── Group 2: Logistics System

└── Group 3: Analytics System

2. Load Balancing

When single consumer capacity is insufficient:

Topic: "User Registration"

├── Consumer 1: Processes 25% of messages

├── Consumer 2: Processes 25% of messages

├── Consumer 3: Processes 25% of messages

└── Consumer 4: Processes 25% of messages

Consumer Group Configuration Best Practices

1. Basic Configuration

# Consumer Group ID

group.id=order-processing-group

# Commit mode

enable.auto.commit=false

# Session timeout

session.timeout.ms=10000

# Heartbeat interval

heartbeat.interval.ms=3000

2. Consumer Count Planning

Here are the key principles for configuring Consumer count:

Minimum

- At least 1 Consumer is required

- Ensures coverage of all partitions

Maximum

- Should not exceed the total partition count

- Additional Consumers will remain idle

- Results in wasted system resources

Recommended

- Formula: Partitions ÷ Single Consumer capacity

- Adjust according to actual load

- Maintain a 30% capacity buffer

3. Consumer Offset Commit Strategy

Consumer Offset is a crucial mechanism for tracking consumption progress. Each Consumer must periodically commit its consumption position (offset) to Kafka to ensure correct recovery after restarts or failures.

Two main commit strategies:

- Auto-commit: Handled automatically by Kafka client, simple but may lose messages

- Manual commit: Developer controls commit timing, higher reliability

Example of manual commit:

// Manual commit example

while (true) {

// Poll messages with 100ms timeout

ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(100));

for (ConsumerRecord<String, String> record : records) {

// Process message

processMessage(record);

}

// Manual commit after batch processing

// commitSync() blocks until commit succeeds or fails

consumer.commitSync();

}

Code explanation:

- Use

poll()to batch fetch messages - Process each message in loop

- Commit offset only after all messages are processed

- Use

commitSync()for guaranteed commit results

This approach, while slightly slower, ensures no message loss and is suitable for scenarios requiring high data reliability.

Common Issues and Solutions

1. Too Many Consumers

Issue: Having more Consumers than partitions leads to resource waste.

Solutions:

- Maintain Consumer count at or below partition count

- If higher parallelism is needed, consider adding partitions

- Assess actual processing capacity requirements

2. Consumption Skew

Problem: Some Consumers are overloaded while others are idle.

Solutions:

- Review partition assignment strategy

- Consider adding partitions for finer-grained load balancing

- Optimize message key distribution to avoid hot partitions

- Monitor Consumer capacity and load

3. Duplicate Processing

Problem: Messages are processed multiple times, affecting business logic.

Solutions:

- Implement message idempotency

- Use manual offset commit strategy

- Set appropriate commit intervals

- Implement business-level deduplication

Summary

Consumer Groups are a key mechanism for Kafka's scalability and fault tolerance. Through proper configuration and usage of Consumer Groups, we can build more reliable message processing systems.

Related Topics: